Finetuning ResNet50 for Art Composition Attributes

My deep CNN for learning painting composition attributes is based on the paper Learning Photography Aesthetics with Deep CNNs by Malu et al. For photography, they train on the Aesthetics and Attributes Database (AADB), which includes the following attributes: Balancing Element, Content, Color Harmony, Depth of Field, Light, Object Emphasis, Rule of Thirds, and Vivid Color. These photography principles are quite different from the painting attributes that I proposed last week.

The AADB database was assembled by Kong et al. for their paper, Photo Aesthetics Ranking Network with Attributes and Content Adaptation. You can find the dataset on their project webpage.

ResNet50

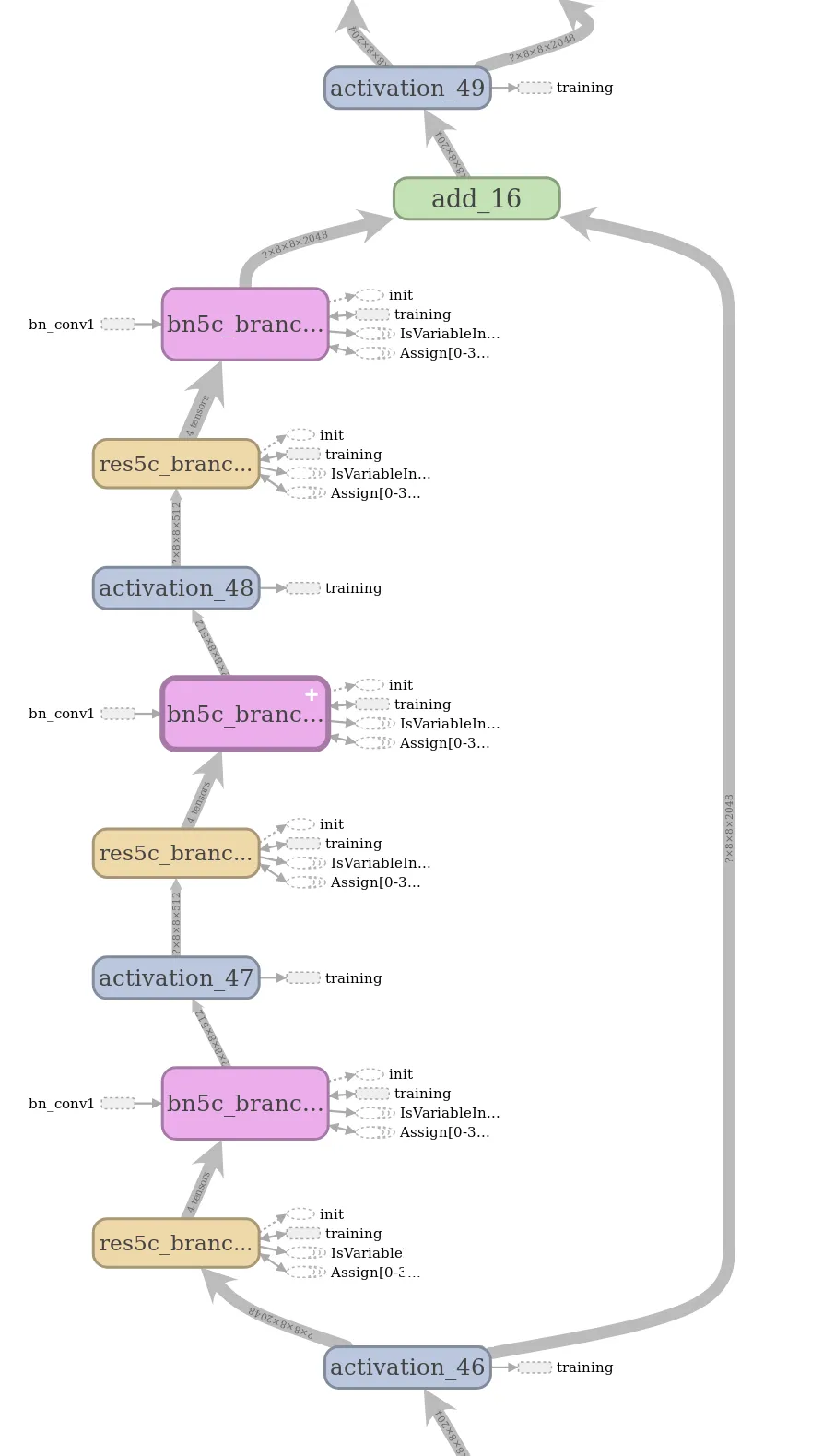

Malu et al.’s model fine-tunes a ResNet50 pretrained on the ImageNet dataset. ResNet50 is a fifty-layer deep residual network with 16 residual blocks. Each block has three convolution layers, each followed by batch normalization, and the block ends with a ReLU activation layer. Here is one block:

ResNet50 + Merge Layer

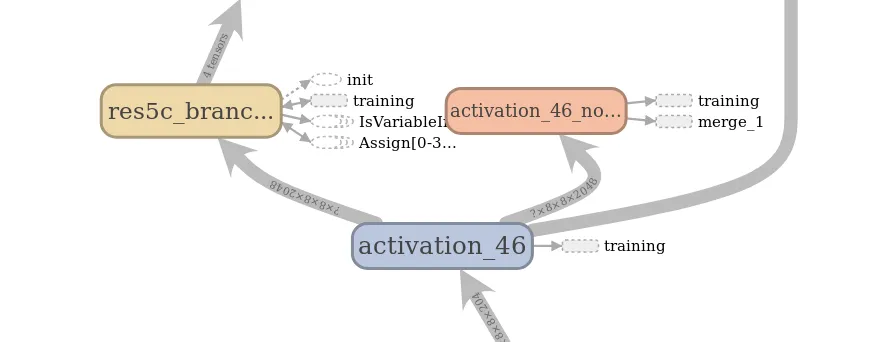

For this model, Global Average Pooling (GAP) is applied to the ReLU output of each of the sixteen ResNet blocks. These outputs are called the rectified convolution maps. For example, “activation_46” in the graph below is used to create an “activation_46_normalization” layer:

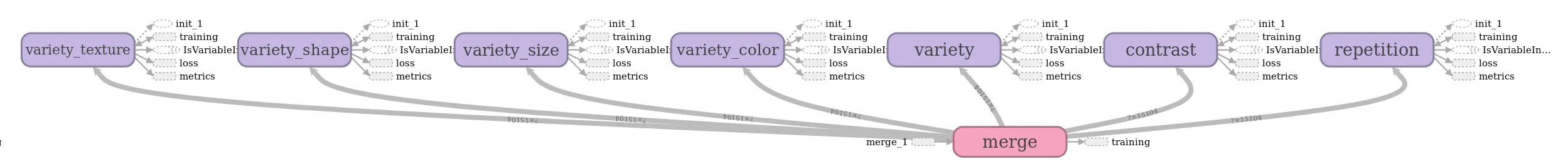
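
To make the pooling step concrete, here is a minimal pure-Python sketch of global average pooling on one set of rectified convolution maps. The function name `global_average_pool` and the toy 2x2 maps are illustrative assumptions, not part of the actual model, where a framework layer would do this work.

```python
def global_average_pool(feature_maps):
    """Average each channel over its spatial grid.

    feature_maps: list of channels, each a list of rows (H x W).
    Returns one float per channel.
    """
    pooled = []
    for channel in feature_maps:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


# Toy rectified (non-negative) maps: 2 channels, each 2x2 (made-up values).
toy_maps = [
    [[0.0, 2.0], [4.0, 2.0]],
    [[1.0, 1.0], [0.0, 0.0]],
]
pooled_vector = global_average_pool(toy_maps)
print(pooled_vector)  # [2.0, 0.5]
```

Each block's maps collapse to one number per channel, so sixteen blocks give sixteen vectors regardless of their spatial sizes.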

Then, the sixteen “activation_x_normalization” outputs are concatenated and L2 normalization is applied to create a merge layer.
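
The concatenate-then-normalize step can be sketched in a few lines of plain Python. This follows the order described above (concatenate first, then L2-normalize). The helper name `concat_and_l2_normalize`, the `eps` guard, and the two toy branches are assumptions for illustration only.

```python
import math


def concat_and_l2_normalize(pooled_vectors, eps=1e-12):
    """Concatenate pooled vectors and scale the result to unit L2 norm."""
    merged = [v for vec in pooled_vectors for v in vec]
    norm = math.sqrt(sum(v * v for v in merged))
    # eps avoids division by zero if every activation is zero.
    return [v / max(norm, eps) for v in merged]


# Two toy branches standing in for the sixteen pooled outputs.
branches = [[3.0], [0.0, 4.0]]
merge_layer = concat_and_l2_normalize(branches)
print(merge_layer)  # [0.6, 0.0, 0.8]
```

After normalization the merged vector has length 1, which keeps blocks with large activations from dominating the outputs purely by scale.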

From the merge layer, there are seven outputs, one for each of the attributes.
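
As a rough sketch of the multi-output idea, the snippet below attaches one small linear head per attribute to a shared merge vector. The placeholder names `attribute_1` to `attribute_7`, the random initial weights, and the plain linear output are all assumptions. The real heads may use a different activation and would be learned during training.

```python
import random


def make_heads(attribute_names, input_size, seed=0):
    """Create one linear head (weights + bias) per attribute."""
    rng = random.Random(seed)
    return {
        name: {
            "weights": [rng.uniform(-0.1, 0.1) for _ in range(input_size)],
            "bias": 0.0,
        }
        for name in attribute_names
    }


def predict_attributes(merge_vector, heads):
    """Apply every head to the same shared merge vector."""
    return {
        name: sum(w * x for w, x in zip(head["weights"], merge_vector)) + head["bias"]
        for name, head in heads.items()
    }


# Hypothetical placeholder names for the seven attributes.
attribute_names = [f"attribute_{i}" for i in range(1, 8)]
heads = make_heads(attribute_names, input_size=3)
scores = predict_attributes([0.6, 0.0, 0.8], heads)
print(len(scores))  # 7
```

All seven heads read the same merge vector, so the shared ResNet features are trained jointly by every attribute.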

Attribute Activation Mapping

Malu et al.’s paper also outlines how to perform attribute activation mapping: the rectified convolution maps are used to produce a heat map that highlights the image elements activated by each attribute. I haven’t implemented this part yet, although I believe that it will be extremely useful.
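
For intuition only, here is a simplified sketch of the general idea behind activation mapping: weight each rectified convolution map by how much its channel contributes to one attribute, then sum the weighted maps into a heat map. It handles a single block with made-up weights. The function name `activation_map` and the toy values are assumptions, and the paper's method, which combines maps from several blocks, is more involved.

```python
def activation_map(feature_maps, channel_weights):
    """Weighted sum of channel maps, giving one H x W heat map."""
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    heat = [[0.0] * width for _ in range(height)]
    for channel, weight in zip(feature_maps, channel_weights):
        for y in range(height):
            for x in range(width):
                heat[y][x] += weight * channel[y][x]
    return heat


# Toy maps (2 channels, 2x2) and made-up per-channel weights for one attribute.
toy_maps = [
    [[0.0, 2.0], [4.0, 2.0]],
    [[1.0, 1.0], [0.0, 0.0]],
]
weights = [0.5, -1.0]
heat_map = activation_map(toy_maps, weights)
print(heat_map)  # [[-1.0, 0.0], [2.0, 1.0]]
```

In a real pipeline the heat map would be upsampled to the image size and overlaid on the painting, with larger values marking regions that pushed the attribute score up.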

Here is a great article by Alexis Cook on Global Average Pooling Layers for Object Localization.

Next Week

I’ll continue to label the WikiArt dataset with the painting attributes. I’ll post my code and some initial results from training.